Promotion and Tenure for Digital Scholarship

Laura Mandell

An Open Letter to the Promotion and Tenure Committee at Texas A&M University, Department of English, upon their request for information about how to evaluate digital work for promotion and tenure.

Evaluating Digital Scholarship

The first thing to do in evaluating digital scholarship is to ask the scholar who has produced it to submit it, if at all possible, for peer review. There are several avenues for doing so. First, any electronic scholarly edition can be submitted to the MLA Committee on Scholarly Editions (CSE) for peer review, and junior faculty should be encouraged to do so. The editions that pass CSE review may be quite print-like, however, so a digital edition's failure to receive the CSE seal is not a reliable indicator of its value as research, a point I return to below. Another venue for peer review is NINES (Networked Infrastructure for Nineteenth-century Electronic Scholarship), which reviews nineteenth-century electronic scholarship. The NINES model is being expanded: my own 18thConnect peer-reviews eighteenth-century digital projects, and three other peer-reviewing organizations are coming into existence: MESA for medieval, REKn for Renaissance / Early Modern, and ModNets for modernist studies.

There are also digital journals. In its “Statement on Publication in Scholarly Journals,” the MLA writes:

The electronic journal is a viable and credible mode of scholarly publication. When departments evaluate scholarly publications for purposes of hiring, reappointment, tenure, and promotion, the standing of an electronic journal should be judged according to the same criteria used for a print journal.

If a digital journal has a peer-reviewing system and an illustrious editorial board of premier scholars, articles published in that digital journal should be valued as highly as those published in print journals, and language to that effect should be incorporated into departmental promotion and tenure guidelines.

Practically speaking, we access ALL journals digitally, via JSTOR and Project Muse among other databases, and medium alone makes no difference to a journal's value. Prestige no longer follows the line between print and digital publication, if it ever did. The faculty who publish in Praxis (an online, peer-reviewed journal hosted by Romantic Circles), for instance, come from Berkeley, Princeton, Duke, and similar institutions, the very institutions we aspire to emulate. There are differences in prestige among digital journals just as there are among journals in print. External reviewers and period specialists should be asked to rank the journals by all the ordinary measures (rejection rates, contributors' profiles, editorial board composition, and circulation statistics or other measures of disciplinary centrality), but in thinking about prestige, mode of access should be ignored.

Similarly, materials published digitally that have been peer-reviewed by NINES or 18thConnect pass through editorial boards as illustrious as those of any major press. Moreover, the technological review committees of these peer-reviewing organizations ensure that the resources which pass review meet the highest standards for digital materials: they are of library and archival quality, not websites of the sort that anyone could mount. Letters from the directors of these organizations can also advise promotion and tenure (P&T) committees about "equivalents": a database may be more like an article than a book in terms of work and impact, or it may resemble an edition more than an argument, or in either case the reverse may hold.

Finally, prizes and awards can indicate the value of a resource. They are not exactly a substitute for peer review, but they do locate the resource within the field. The Blake Archive won the MLA's "Distinguished Scholarly Edition" award for 2003: not the best DIGITAL edition, but the best edition per se.

In the absence of these more obvious signs, how should digital scholarship be judged? I would like to offer some ideas, beginning with a definition.

Definition of Digital Scholarship

In effective digital research, digital media are not incidental but integral to the scholarly work. Digital scholarship is not, in other words, simply scholarship that takes place in digital media: all the digitized journal articles in JSTOR and Project Muse do that, and in fact all publications, even books, either have now or will soon have some kind of digital manifestation. Most e-books might as well be books. In fact, it would be a lot more convenient if they were: the printed codex never needs to be recharged. If publishing a work on paper involves no loss of functionality, then the candidate should have published it on paper, with some exceptions discussed below. This principle has two implications. First, it rules out any digital project in which a scholar acts as a "content provider" and drops his or her work off at the door of IT Services. Second, it means that digital scholarship by its very nature requires collaboration, and so we must have peer-review mechanisms that take collaboration into account.

Let me emphasize the potential catch-22 here. If someone publishes online something that is, in its core idea, really a print artifact, members of P&T committees might be justified in thinking, "This candidate only made a digital edition because no one would publish this work." Conversely, if a candidate pursues digital scholarship in order to find out what can be done in new media, his or her research requires collaborating with designers and computer programmers. This is real collaboration, of the sort fostered each year through summer fellowships funded by the National Endowment for the Humanities (NEH) and run by the online journal Vectors. In that case, P&T committees are liable to say that "collaboration doesn't count." It is because new media require collaboration that the provosts and deans at the NINES Summer Institute composed a document about authorship; it is available under "Whitepapers and Documents" at http://institutes.nines.org.

To return to the first half of this catch-22, however, it is indeed sometimes the case that "no one would publish" scholarship that deserves to be published. I am technical editor of Lynda Pratt's amazing e-collection of Robert Southey's letters, coded and published by Romantic Circles, and I have a great story about why those letters were published digitally. Lynda was being interviewed on the BBC about her work. Linda Bree, acquisitions editor for Cambridge University Press in Romantic Studies, was listening to the interview and began to walk to the phone to call Lynda with an offer of publication. Then the interviewer asked Lynda about the extent of the collection. It is huge: we have 877 letters TEI-encoded and up on Romantic Circles, and we have published only parts I and II of the eight-part edition. Upon hearing Lynda Pratt describe the scope of her edition, Linda Bree of Cambridge UP put the phone down without calling. I myself have had trouble publishing editions of poetess poetry and criticism: their authors are lesser-known writers whom no press can afford to risk publishing. In such cases, the digital edition may in fact closely resemble a print edition, but the editing must be as rigorous as in any print edition.

Editorial rigor involves different things in the digital world than in the world of print, though of course the two are connected. Digital editions should ideally fulfill about 70% of the guidelines for vetting electronic editions offered by the MLA Committee on Scholarly Editions, including:

- Use of a coding standard, such as TEI (the set of XML tags and validation schemas offered by the Text Encoding Initiative Consortium);

- Database integrity (ways of determining whether what is in a field is right);

- Solid design principles so that the resource promotes rather than deters thinking;

- Attempts to render the work interoperable with other digital resources.
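For readers outside the field, it may help to see what a "database integrity" check can look like in practice. The following is a minimal Python sketch, not drawn from any project mentioned in this letter: it assumes a hypothetical table of letters with invented field names (`id`, `date`, `sender`, `recipient`) and invented sample records, and simply reports empty fields, malformed identifiers, and impossible dates.

```python
import re
from datetime import date

# Hypothetical record layout for a digital edition of letters; the field
# names and the identifier pattern are illustrative assumptions only.
REQUIRED_FIELDS = ("id", "date", "sender", "recipient")
ID_PATTERN = re.compile(r"^[A-Za-z]+-\d+$")


def check_record(record):
    """Return a list of problems found in one record (empty if none)."""
    problems = []
    # Every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            problems.append(f"missing {field}")
    # Identifiers must follow the assumed "word-number" convention.
    rec_id = record.get("id")
    if rec_id and not ID_PATTERN.match(rec_id):
        problems.append(f"malformed id: {rec_id}")
    # Dates must be real calendar dates in YYYY-MM-DD form.
    raw_date = record.get("date")
    if raw_date:
        try:
            date.fromisoformat(raw_date)
        except ValueError:
            problems.append(f"invalid date: {raw_date}")
    return problems


# Invented sample records: the second has an empty field and a 30 February.
records = [
    {"id": "letter-1", "date": "1797-03-12", "sender": "Writer A", "recipient": "Writer B"},
    {"id": "letter-2", "date": "1797-02-30", "sender": "Writer A", "recipient": ""},
]
for r in records:
    print(r["id"], check_record(r))
```

Checks like these cannot tell whether a transcription is faithful; they only catch entries that cannot possibly be right, which is one small part of what reviewers mean by database integrity.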

For something larger than an edition, a digital archive for instance, one needs to ask, does the digital archive make available what one would expect such a resource to provide? (This is comparable to asking, “Why didn’t a book on this topic discuss X?”)

More generally, in assessing digital scholarship, it may help to think of digital research as "curation," a term much discussed recently in the digital humanities and library communities. Typically, scholars in literary history, for instance, go into the archive and emerge with an argument backed up by particular texts and images, winnowed out of a mass of data that the scholar examined. One can think of a monograph in a particular subfield of a discipline as a lens that brings the past into focus by bringing one text to the fore and relegating another to background information (a kind of organizing that occurs even in New Literary History, for all its radical leveling of genres and canons). Seen this way, what a scholar does online in creating a thematic research collection is not so distant from monograph writing after all. Based on a particular reasoned theory, that person selects some materials and deselects others. Whereas in a monograph this "filtering" is done for the sake of making one particular argument, curating textual data in online research environments makes a number of arguments possible, all of them nonetheless theoretically inflected by what has been brought into the limelight and what has been relegated to obscurity.

Digital archives are close enough to monographs and editions that judging their value as research can be fairly straightforward.

Here follow two examples of items that might not look like research or scholarship but in fact ARE research in the field of digital humanities, accompanied by arguments as to why P&T committees ought to value these particular works highly.

A. The HyperCities Project

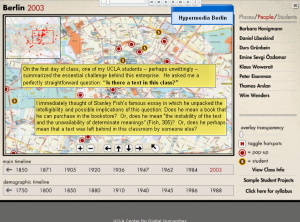

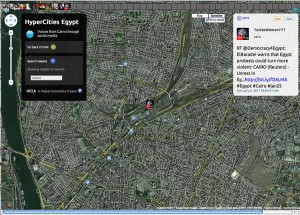

HyperCities director Todd Presner's original mapping project overlaid historical maps on Google Maps in order to present Berlin, both what one would find there now and the monuments of its past. But the project evolved into a platform that anyone could use to launch and record mappable histories.

Above is one of the maps from an instance of HyperCities called "HyperCities Egypt." Here, someone has linked a map of Cairo to a Twitter stream recorded during the demonstrations against Mubarak. Each marker on the map shows where a person was when he or she "tweeted" or "re-tweeted" something about events as they transpired, using a cell phone on the streets of Cairo. The Twitter stream plays back in movie-like fashion (note the "slow" and "fast" controls at the top right). This particular use of HyperCities provides an amazing resource for historians of current events, including the tsunami in Japan as well as the cities swept up in the Arab Spring.

Todd Presner’s work on the HyperCities mapping project has, ever since its first appearance in Vectors, taken the digital humanities world by storm. Vectors is not just a journal that publishes what we call “digitally born” projects, those for which digital media are intrinsic rather than extrinsic. Vectors directs an NEH Fellowship program, bringing scholars for six weeks of the summer to the University of Southern California’s Institute for Multimedia Literacy where they collaborate with computer scientists and graphic designers to create digital resources. As editor Tara McPherson points out, these projects are often later funded by the NEH with other grants, and of course receiving grant funding is one important indicator that digital scholarship constitutes valuable research. Another indicator is the number of speaking engagements to which a scholar is invited in order to present their project: Dr. Presner has been invited to speak about HyperCities worldwide.

As a digital project, HyperCities does precisely what is held up as most valuable about digital technologies in the book Digital Humanities (MIT Press), which Presner co-authored with Jeffrey Schnapp, Johanna Drucker, Peter Lunenfeld, and Anne Burdick: it expands the public sphere and allows humanists to participate in it alongside others whose concerns, needs, and capacities for selecting and shaping data are considered equal to, if not more important than, the concerns of experts. It transforms humanities expertise into a platform for enabling discussion, contestation, and what the education manuals have infelicitously called "life-long learning." By promoting data curation, that is, by allowing groups of people to use the HyperCities platform to create HyperCities Now, HyperCities LA, and HyperCities Iran, this platform, which originally presented the history of Berlin, gives people a structure for organizing huge amounts of data: Twitter, photo, and YouTube streams produced as people respond to historic crises or document the day-to-day.

It is tempting to see Dr. Presner's development of this research platform as service rather than research, as merely enabling others to investigate rather than itself being new scholarship. But Presner defines the methodological affordances of HyperCities, the kind of research it enables, as "thick mapping," playing on "thick description," Clifford Geertz's ethnographic notion that was taken up by the New Historicists. Through its interactivity with social media, HyperCities fosters exchange within a genuinely global public sphere. This means that software used by and for people all over the world is itself teaching people, and organizing information, according to a methodological principle that comes from the humanities.

By counting a professor's development of a platform as research, we legitimize as scholarship the building of software that promotes the activities of citizen scholars in ways that humanists see as valuable. I would suggest that any delegitimization of such work, any denigration of interventions in the public sphere by humanities scholars in the academy, is profoundly suspect on an ideological level, insofar as such denigration contributes to marginalizing the humanities and eroding our impact on the world at large.

What P&T committees need to know when such projects emerge is this: building software programs and platforms capable of harnessing, fostering, and shaping massive amounts of non-scholarly, extramural cultural production according to principles that humanists have developed, platforms that get others involved in critical thinking of the sort we perform and teach, takes a huge amount of serious intellectual work, well beyond the purview of simple technological development. If one defines research in the digital humanities as discovering and creating resources that empower people, direct tasks, and structure information according to articulated and articulable humanities principles, then HyperCities is research in the field of digital humanities. It needs to be recognized as such by those doing research in the humanities disciplines with which it overlaps but to which it is not equivalent. Any department wishing to support the digital humanities needs to be prepared to value HyperCities on a par with a monograph published by Duke University Press. In fact, it is increasingly common to see a digital resource such as the Trans-Atlantic Slave Trade Database spawning or accompanied by a book from a major university press, as well as to see presses undertake to publish digital resources.

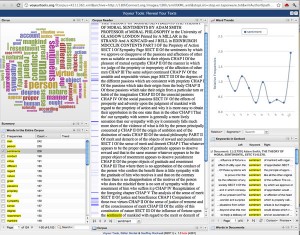

B. Voyant: Reveal Your Texts

Screenshot of Voyant

I wish to give just one more example of an out-of-the-ordinary research project in digital humanities for which someone should be tenured and promoted: software. Geoffrey Rockwell, a philosopher, and Stéfan Sinclair, a literature scholar, developed a series of tools that linguists might use to analyze texts and then visualize the results. These tools were made part of TAPoR, a portal that scholars could visit. No one came. Next, after many usability studies and false starts, they developed what they were calling the Voyeur window. (Stéfan is French Canadian and so didn't know the word's connotations in English until someone pointed them out to him; the tool is now called Voyant.) Voyant is a window into which you can load texts and then see them analyzed, immediately, by a number of tools. The most amazing thing about this software is that it allows you to embed a window in a digital article, a window in which live textual analysis is possible. This is one of the first, minor ways in which a digital article can do something a printed one cannot, but in my view it is a huge step. Throughout their careers, Rockwell and Sinclair have consistently argued that literature professors can use tools developed by computational linguists for qualitative literary analysis, for close reading. The Voyant window enacts this argument:

- you do not have to be an expert in computational linguistics to launch the tools or understand what they are doing — the interface makes all that possible;

- the text is kept front and center in the interface as that which is most important about any analysis.

Once again, we have here digital humanities research buttressed by careful theorizing: software that counts as research precisely insofar as it enacts humanities principles.
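Voyant's own code is not reproduced here. As a rough illustration of the kind of computation such tools run the moment a text is loaded, here is a minimal Python sketch of a word-frequency count, one of the simplest measures text-analysis tools display. The stoplist, the tokenizing rule, and the sample sentence are assumptions made for illustration, not Voyant's settings.

```python
import re
from collections import Counter

# A tiny illustrative stoplist so function words do not dominate the counts;
# this list is an assumption, not the one any particular tool uses.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "that"}


def top_terms(text, n=5):
    """Return the n most frequent non-stopword terms as (term, count) pairs."""
    # Lowercase the text and treat runs of letters and apostrophes as words.
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if w not in STOPWORDS)
    return counts.most_common(n)


sample = "The text is kept front and center. The text matters; analysis serves the text."
print(top_terms(sample, 3))
```

The numbers alone say little; the point of an interface like Voyant's is to keep such counts tied to the passages they come from, so that the reader returns to the text itself.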

If you go to http://hermeneuti.ca/voyeur, you will come to a wiki providing two major texts. One, "The Rhetoric of Text Analysis," explains the tool through an instance of its use in argumentation; the other is an instruction manual. Together constituting the equivalent of a book, both are major publications in the field of digital humanities. The wiki's history pages show precisely who wrote and revised what, and so one can see how intensive and fruitful co-authoring can be.

How could faculty outside the digital humanities judge the importance of "The Rhetoric of Text Analysis" and the Voyant manual to the field as a whole? That workshops on Voyant were held at both DH2010 and DH2011, the Digital Humanities conferences held at King's College London and Stanford University respectively, each with a 30 to 40% acceptance rate, is a clue, but understanding Voyant's impact would be easier with the help of an expert in the field of digital humanities. One request that comes up repeatedly in discussions of rewards for digital scholarship is that P&T committees need access to the names and addresses of experts in the field who could consult with them as well as write external evaluation letters. I'm part of a group called "dhcommons," and we are working on developing a database of faculty experts in the field.

In closing, I offer the following resources:

Groups that are formulating Guidelines for Promotion and Tenure Committees regarding Digital Scholarship

- The MLA Committee on Information Technology is currently revising its guidelines to bring them up to date. You can see the original document plus suggestions for revision, or offer your own suggestion.

- Profession 2011 offers a cluster of essays on the topic of Evaluating Digital Humanities Scholarship.

- For three of the MLA conferences between 2009 and 2012, the Committee on Information Technology has sponsored workshops for chairs and candidates on evaluating digital scholarship. Resources and information are available here: http://wiki.mla.org

- In 2010, NINES was awarded an NEH Summer Institute grant to write documents on the topic. The 2011 Institute was attended primarily by provosts and deans; the 2012 Institute will bring in primarily department chairs. The documents written so far are available here: http://institutes.nines.org.

Thank you.

Sincerely,

Laura Mandell

Originally published by Laura Mandell in 2012.