Critical Discourse in Digital Humanities

Fred Gibbs

My interest in the role and nature of criticism in the digital humanities grows out of a question that Alan Liu recently asked: Where is the cultural criticism in the digital humanities? Although I’m not convinced that digital humanities needs its own brand of cultural criticism beyond what its constituents would normally do as humanists, the question resonated with me because it made me wonder (and the only answer was silence): where is the criticism in the digital humanities?

Sadly, there really isn’t any. This is a most unfortunate situation for both the innovative projects and the people that constitute the digital humanities community. This essay explores the value of creating a critical discourse around scholarly work in the digital humanities, and it is organized around three main ideas:

- Digital humanists have not created an effective critical discourse around their work.

  I don’t mean that we haven’t gone far enough in publicly trashing each other’s projects. Nor do I mean simply that there hasn’t been enough peer review (though that’s true; more on that later). Rather, I mean that digital humanities criticism needs to go beyond typical peer review and become a genre of its own: a critical discourse, a kind of scholarship in its own right, that will help shape best practices and make the value of our work clearer to those both inside and outside the digital humanities community.

- We need more theoretical and practical rubrics for evaluating digital humanities work.

  Although scholarly communication and peer review have been highly active topics in digital humanities circles for some time, these discussions have not yet produced truly useful evaluative criteria or a discourse that appreciates the differences between digital and traditional work (not that there is a strict dichotomy). Everyone in the field knows that the most innovative digital humanities projects cannot be fully evaluated through the traditional, critical, and theoretical lenses of the humanities. But what lenses do we have? How do we know when to use them? How can we help others outside the field use them?

- Digital humanities work requires a different kind of peer review to produce effective criticism.

  The multifaceted nature of digital humanities work requires a different kind of critique than is typical in the humanities because it places unusual demands on both critics and criticism itself. Effective single-author reviews are a nearly impossible expectation; useful critical discourse must rest on a new kind of collaborative, community-mediated peer review.

I: Towards a critical discourse

We all know that disciplinary boundaries are notoriously difficult to define. Yet they remain recognizable beyond professional titles and departmental affiliations. This boundary problem also raises the question of whether there is any real difference between the humanities and the digital humanities, an interminable debate that need not detain us now. I don’t believe that the digital humanities are fundamentally different from the traditional humanities in any larger epistemological sense, even if one takes the hermeneutics of building (often sloganized as “more hack, less yack”) as a point of departure between them. For present purposes, it will suffice to say that the digital humanities are, at least at the moment, different enough from the analog humanities for the distinction to matter.

Part of what defines a discipline is the rhetoric and aesthetics of its scholarly discourse. Philosophy texts sound different from history texts, which sound different from literary analysis. These differences become especially apparent during collaborative projects. As much as we champion cross-disciplinary work, there is an inherent unease to it, in no small part because such work is harder to evaluate. Given a particular piece of scholarship, how should one read it? Which criteria should be applied? Of course, these lines in the sand are easily blurred and effectively dissolve if one looks too closely. But in the larger view, they exist and have consequences.

Disciplinary rhetoric and aesthetics help characterize and delineate different kinds of scholarly work, and they are manifest to no small degree in the way work is evaluated: through community consensus, convention, and ongoing efforts to codify practical and theoretical ideals. We have become very good at this for traditional disciplinary work. One major way in which digital humanities is in fact separate from the humanities (again, at least for now) is that it requires new ways of evaluating very complex work in terms that are often unfamiliar to most humanists.

One of my favorite illustrations of the difficulty of evaluating digital work comes from William Thomas’s article “Writing a Digital History Journal Article from Scratch.” The article dates from 2007 but describes events from around 2003 that now seem almost ancient: how analog historians critiqued history scholarship that did not look anything like the traditional journal article. Despite the project’s many virtues, reviewers could only wonder what it did better than standard practice, and whether “the rewards [of the website] were simply not commensurate with the effort and confusion involved.” Well, it was a long time ago, you say. Agreed. But I’m confident that a similar exercise today would yield strikingly similar results, certainly from a non-digital humanities audience and, given the breadth of the digital humanities community, from plenty who identify as digital humanists themselves.

This is not to criticize the average humanist for not knowing the value of normalized data sets, relational databases, or valid XML. There is indeed more sensitivity to digital work today, but the work itself has become considerably more complex as well. My point here is that those who do know the value of these technologies haven’t been particularly clear about why they are useful in the contexts in which they’re employed. What we might perceive as ignorance on the part of reviewers stems at least in part from the fact that the rhetoric and aesthetics of digital humanities work are not yet well established. In other words, the critical sphere has not yet materialized.

Why might this be? One obstacle to fostering a critical discourse may lie in the nature of the digital humanities community itself, a rallying point for many, if not most, self-proclaimed digital humanists. As a community, we’ve been encouraging and supportive, tending to welcome everyone with open arms. The “Big Tent” theme of the 2011 Digital Humanities Conference suggests that this inclusiveness is ongoing. Such an approach has been essential and ultimately very successful in broadening the scope and influence of the field. This should, and hopefully will, continue.

However, such strong community solidarity and support may inadvertently curtail or discourage public criticism, and the constantly expanding, amorphous boundaries of digital humanities itself further complicate evaluation. This is not to suggest that we should instead become exclusionary and inwardly hostile. But we can hardly complain that tradition-bound humanists don’t appreciate the value of our work when we haven’t really outlined how it’s different and how it should be appreciated. In other words, we haven’t provided a public critical discourse that indicates to those without expertise what work is good and what is not, and that thus serves as a compass for practitioners, critics, and outsiders alike.

Some post-talk tweeting prompts me to clarify two important points:

- To argue for a critical discourse is not to suggest that digital humanities projects are inherently flawed as humanistic scholarship (a still far too common misunderstanding about the goals of digital humanities) and therefore must offer more tempered epistemological claims and greater transparency—and should be criticized when they don’t. Good criticism will, of course, address these issues, but that’s not really the point here. Criticism serves a much larger role beyond pointing out flaws (more on this later).

- To suggest that there is an insufficient critical discourse surrounding digital humanities work does not imply that digital humanists have been generally uncritical in their work. We’ve all criticized projects, approaches, and results behind closed doors; we continually learn from and improve upon past work; we all think carefully about how to produce our best and soundest scholarship. But the most useful critical discourse is a public one. Exactly what constitutes the sound scholarship that we want to do (and actually do) is not nearly as apparent to others, especially those outside the digital humanities community, as it should be. It falls to the producers of that good scholarship to explain what is and what is not considered good, and why.

II: The value of digital humanities criticism

The long history of critical theory has well established the various functions of criticism, which need not be rehashed here. Digital humanities projects are not art, of course, and therefore may appear to need little more than simple peer review. I want to argue that a critical discourse of digital humanities work (1) must be concerned with both interpretation and evaluation, and (2) is central to establishing the importance of the scholarly and even cultural work that such projects do. On the whole, a critical discourse will provide crucial services for an interested audience: establishing utility and value; questioning blemishes and flaws; and identifying sources, commonalities, and missed opportunities. Criticism points out true innovation when it’s perhaps not obvious that paint slopped onto a canvas is actually worth thinking about. It also points out when claims of success amount to little more than (to adapt a phrase from Michael Joyce) technological frosting on a stale humanities cake.

Haven’t we all seen intriguing, if not jaw-dropping, visualizations that made virtually no sense? Of course, the real thrill of taking these in is recognizing that some obscene amount of data can be viewed in a small space, possibly interactively. Anyone who has thought about creating visualizations from even well-standardized data knows how difficult it is. The necessary technical triumphs notwithstanding, we need to discuss (for example) the value of being able to automate the creation of such visuals separately from the communication that happens as a result of their design. We need to distinguish a methodological triumph from an interpretive one. Imagine how an explanation of how such visuals were created could ease fears of black-box manipulation. This is just one instance where a critical discourse for digital humanities would be far more valuable than grant applications that sell potential work and post-facto white papers that champion whatever work got done. We need more than traditional journal articles that describe the so-called “real” humanities research that came out of digital projects.

Perhaps most importantly, as I’ve already suggested, criticism serves a crucial signaling function. Matthew Arnold, in The Function of Criticism at the Present Time, defined criticism as “a disinterested endeavor to learn and propagate the best that is known and thought in the world” [1]. One might easily and rightfully disagree with what critics like Arnold would have categorized as “the best,” but I think his statement describes pretty well what we need to do. The staggering rate of digital humanities project abandonment has caused some alarm of late. One reason for it is that most academics aren’t very good at marketing beyond their disciplinary peers. Another is that it’s unclear what is worth emulating or learning from, especially for those new to the field. Criticism selects and propagates projects that deserve recognition and can serve as models. To continue with the previous example, we need criticism that praises the technological achievement of a visualization while condemning poor design practices; we need criticism that lauds its interpretive potential while critiquing the transferability and reusability of its methodology.

Of course, criticism has to be good and original, not dogmatic. Irving Howe, the influential mid-twentieth-century cultural critic, remarked that the power of insight counts far more than allegiance to a critical theory or position, since no method can give the critic what he needs most: knowledge, disinterestedness, love, insight, style.[2] It’s not easy to have these! But criticism that reflects these talents performs extremely valuable scholarly work, work that goes far beyond the original project and makes it even more useful. Such criticism is especially good at establishing and debating the terms in which a particular work should be analyzed. The discourse of critique is where new standards get hammered out. It provides the connective tissue between projects that pronouncements from on high simply cannot supply.

One reason I’m invoking somewhat old-fashioned critics is the way evaluation and interpretation came together in their criticism. The way they hammered out a new kind of critique to judge, evaluate, and make sense of literature seems apropos to the new forms, structures, and processes of digital humanities work, which is often fundamentally different from previous kinds of scholarship. For precisely that reason, such work requires a different approach to evaluation and critique. We need a critical discourse situated between contemporary critical theory, which insists on interpretation, and earlier schools of criticism, in which evaluation played a much larger role. We need both.

The New Criticism of the 1940s and 50s largely relieved the critic of aesthetic debates about whether something should be judged good or bad. Critical emphasis refocused on the interpretation of a self-contained object. This is not what we want to do with digital humanities criticism. Howe, a member of the “New York Intellectuals,” advocated connecting literary texts to their political and historical circumstances. Several pieces in the recent Debates in the Digital Humanities echo this admonishment, though without aiming it at particular projects, and such concerns should inform our public engagement with digital humanities work.[3] The interpretive element remains important because, as with art, what the community can do with the results of a digital humanities project often lies outside what its creator or project team envisioned; multivalent projects in particular invite interpretation. What does it mean that a database has been structured in a certain way? What are the larger consequences of choosing one design over another? How does a certain project push the boundaries of what we consider acceptable digital humanities work? How can new analytical processes or methodologies be applied in different contexts? These are subjective and interpretive questions that we must openly discuss.

Because digital humanities work is scholarship rather than art, the evaluative component that appealed to critics like Arnold must feature in any useful critical discourse. Contra Wimsatt and Beardsley, authorial intention must be considered, as formulated in Goethe’s three questions for the critic: What was the aim? How well was it carried out? Was it worth it? Evaluative critiques are important because criteria for neither digital humanities work nor its evaluation have been well established. In broad terms, we might fruitfully follow the literary critic Barbara Herrnstein Smith, who encourages us to evaluate a work according to its integrity, coherence, and boundaries, the categories to which it belongs, and the features, qualities, and properties that make it what it is.[4] And we must do this not according to personal and subjective experience, but with respect to best practices as understood by the critic. A critical discussion of goals and outcomes will shape practices far more efficiently than decontextualized white papers or manifestos that are disconnected from actual implementations, with their messy details and the devils lurking therein.

So what do we look for?

This last year in particular has seen much energetic rethinking of scholarly publishing and evaluative criteria for digital work. For example, the MLA has outlined types of digital work, as well as guidelines for evaluation. To its credit, the MLA has been one of the most visible scholarly societies in starting and facilitating such discussions. However, here and elsewhere, the focus has remained on getting non-print work recognized and promoting the value of process over results.

These were important arguments to make (and to keep making in some cases), but now we must go beyond them. Even if digital work is more accepted, we haven’t really created sufficient guidelines for evaluating digital work (broadly defined) on its own terms. Somewhat better in this regard are the NINES guidelines for peer review, which call attention to usability and code. Overall, though, these guidelines are either too general to enable rigorous criticism or too specific to NINES projects. In both cases, the suggested guidelines for evaluating digital work are not all that different from those for evaluating analog work. On one hand, that’s exactly their point! On the other hand, this is somewhat counterproductive because it doesn’t sufficiently consider what’s unique about digital work.

I’d like to outline a few very general criteria that might be broadly applicable to digital work, however disparate it may be. I make no claims of completeness here.

Transparency

Can we really understand what’s going on? If not, it’s not good scholarship. “I used a certain proprietary tool to get this complicated visualization that took a gazillion hours to encode in my own personal schema (I won’t bore you with the details), but here’s what I learned from the diagram…” This cannot be considered good scholarship, no matter what the conclusions are. It’s like not having footnotes. Even though we generally don’t check footnotes, we like to think that we can. So it’s natural to expect resistance when the footnote resembles a black box. Digital humanists have gained some traction in encouraging others to value process over product. Transparency helps us evaluate whether a process is really innovative or helpful, or whether it’s just frosting.

Reusability

Can others export the methodologies, code, or data and apply them to existing or future projects? This embodies so much of what is central to the ethos of the community; we’re always experimenting, always looking for better ways of doing things. The criterion of reusability creates an interesting gray area for generalized tools created by a project that aren’t specific to that project, the humanities, or anything else (scripts that tidy up predictably messy data, for example). Are these to be shared and discussed? For now, absolutely! It’s part of the effort, as Arnold suggested, to signal to our peers what proves most useful (hopefully, with justification). Obviously, not all project components or ideas are reusable. But what must be reusable and what cannot be are important theoretical questions that will get worked out in a vibrant critical discourse, both about concrete work and in abstract theoretical terms.

Data

Because most, if not all, digital humanities projects rely on data, that data simply must be available, not only for testing algorithms or verifying research results but also for combining with other data sets and tools. Exactly how data should look is far from obvious. If nothing else, discussing a project’s use of data will encourage conversations about interoperability, appropriate standards, ownership, copyright, citation, and so on. These issues are becoming more relevant than ever as we create new research corpora that bridge historically separate disciplines and archives. It’s simply unacceptable to keep our data hidden (at least data that supports published work) with a wave of the hand: “well, I cleaned this up and standardized it, and reformatted it … but I’m going to keep this work invisible and hoard it.” It’s like footnotes without page numbers.

Design

By “design,” I really mean a project’s underlying organizing principles. Our critiques must address whether a particular design strategy is the best one, and why, regarding both presentation and infrastructure. One challenge here is that academic convention dictates that, at least in terms of scholarly content, we privilege content over form. On one hand, good web design separates these; on the other hand, as McLuhan pointed out long ago, the medium is the message. Our attitudes about legitimacy and trust hinge on aesthetics in a relatively new way, almost a new kind of social contract between resource creators and users. Infrastructure is no less important, and perhaps less hidden than we suppose. Our critiques of design must also consider the decisions behind database design, encoding, markup, and code.

III: New kinds of peer review / criticism

My point here isn’t just that digital humanities projects should embrace these values. Many already do. My point is that they need to be critiqued explicitly and publicly. But where and when do we do this? How does it fit with existing review and evaluative conventions?

As everyone knows, the nature of publishing has changed; we now do many digital projects that are never really finished or officially published, at least not with an imprimatur of review and vetting. This means that the typical review process has been turned on its head. Getting a grant is too often treated as an end in itself, as if it validated even the completed work. But this signing-off by the scholarly community happens before any work gets done. While traditional scholarship (books and articles) is held accountable to its stated goals and methodologies (as far as the medium permits), digital projects have not been held to that accountability by the scholarly community. This is a grave disservice in two ways: projects learn less from each other, and projects remain isolated from relevant scholarly discourse.

It may sound as if I’m simply advocating for more peer review, as did a Chronicle of Higher Education article, “No Reviews of Digital Scholarship = No Respect,” which argued that scholarly societies and editors of traditional journals need to step up and encourage this work. Indeed, at least for now, peer review remains necessary for legitimization, and we certainly should have more of it for digital humanities work. However, while change on the part of societies and journals would be nice, why should a few gatekeepers dictate the terms?

More importantly, I’m not convinced that getting a formal review, and thus the imprimatur of serious scholarship, is enough. We need a fundamentally different kind of peer review. Just as the nature of publishing is changing, the nature of peer review must evolve, especially for large, collaborative digital humanities projects, but also for small, individual ones. Digital humanities work requires a different kind of criticism than most academic criticism because of the very nature of the work. Digital humanities projects often serve much broader audiences and embody interdisciplinary practices in a way that eludes traditional models of critique.

I mentioned earlier the unease of fitting interdisciplinary work into professional pigeonholes. To foster useful criticism, peer review needs to be fundamentally collaborative in two ways:

- More reviewers for individual projects. How many people can really critique all the facets of a digital humanities project, which range from graphic and interface design to code and encoding standards? Even if one could, it’s a herculean task ill-suited to the typical lone reviewer.

- Coordinated efforts to solicit and publish these critiques. Given the complex publishing models of digital humanities projects, why not move away from editor-mediated peer review, which minimizes public visibility?

So far I’ve discussed what happens after a digital humanities project is done. Ideally, projects would build opportunities to solicit critiques into their timelines, in the hope of receiving feedback that avoids laudatory platitudes and instead shapes the project in productive, if challenging, ways. These critiques might well be published as part of the project, which would help foster a vibrant critical discourse around the work being done. Good criticism will, of course, be applicable well beyond any particular project and will constitute scholarly work in itself.

Digital humanities work is often iterative, and the review process needs to be as well. Because digital humanities projects are inherently more public than the typical humanities project, everyone benefits when their critiques are public too. Project funders must prioritize and encourage public critiques as a way of establishing scholarly value and consider these critiques in funding decisions. A project without accountability, without connectedness, without critique, simply fills another plot in the digital humanities project graveyard.

One arena in which we might cultivate a vibrant and sustainable discourse is the classroom. Our digital humanities courses need to explicitly teach critical methods for the unique issues involved in confronting digital humanities work. Both theory and practice are essential here. We must have more than gossipy complaints that don’t go beyond the classroom walls, or the vapid reviews that fill the backs of most printed journals. Good criticism is very difficult. Students need practice pointing out what’s good and what’s lacking in a project in a way that benefits both the project and the average humanist who needs to understand it. The classroom also makes it easy for students to practice working in review teams that leverage group expertise to address the variety of elements within a complex project.

IV: Lastly

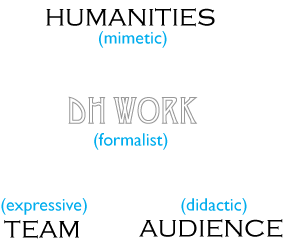

The criteria I mentioned earlier (transparency, reusability, data, design) operate in a larger theoretical context that we must consider as well. This might be profitably represented in an adaptation of a well-known diagram of criticism from M. H. Abrams, which shows four proximal spheres of criticism that might guide our approach. The formalist critique examines the form of the work itself, namely how well its structure, form, and design serve its purpose in the context of similar works. Didactic criticism focuses on the extent to which the work can reach, inform, and educate an audience. In the original diagram, mimetic criticism would address the extent to which a work of art mirrors something larger about the world. For digital humanities criticism, it might evaluate how well digital humanities work accomplishes or facilitates humanistic inquiry. Lastly, the expressive critique discusses how well the work reflects the unique characteristics and style of the creator(s).

Of course, these critical spheres are not entirely separate. In addressing each of them, for instance, we must remember that code and metadata, as well as data and whatever structures govern it, are not objective entities but are informed, attacked, and defended by ideology and theory. While these spheres of criticism might be applicable to humanities research generally, they are especially crucial for contemplating multifaceted digital work that is so often misunderstood. Furthermore, these different areas of critical focus provide an opportunity for more critical theory in the digital humanities, theory that grows out of the field’s own work and also draws on critical methods from further afield, such as new media, the history of technology, and platform, hardware, and software studies.

Evaluative and interpretive public critique has much to offer the digital humanities. It’s no panacea, of course. But it could soothe the growing pains of a relatively new field or discipline or community, or whatever digital humanities should be called, especially since it depends on technologies and processes that change almost from day to day. Yet the field’s problematic identity and transitory nature should encourage us to recognize the importance and power of a critical discourse, one that responds to particular projects and helps explain, shape, and improve scholarship that demands new kinds of products and processes of engagement.

Originally published by Fred Gibbs on November 4, 2011. Revised March 2012.

This post is a moderately revised version of a presentation for MITH’s Digital Dialogues series. This version has benefited from the thoughtful questions and comments that followed, as well as from the insightful critique of Natalia Cecire.

- [1] Matthew Arnold, The Function of Criticism at the Present Time (1864; New York: Macmillan, 1895), 81.

- [2] Irving Howe, “The Conduct of Criticism: How We Do What We Do,” Salmagundi 85/86 (1990): 55-70.

- [3] Matthew K. Gold, ed., Debates in the Digital Humanities (Minneapolis: University of Minnesota Press, 2012).

- [4] Barbara Herrnstein Smith, “Contingencies of Value,” Critical Inquiry 10.1 (1983): 1-35, at 18.