Mapping Texts: Visualizing American Historical Newspapers

Andrew J. Torget and Jon Christensen

Mapping Texts is an ambitious project with a simple mission: to experiment with new methods for finding and analyzing meaningful patterns embedded within massive collections of digitized historical newspapers.

Why do we think this is important? Because, quite simply, historical newspapers are being digitized at a rate that is rapidly overwhelming our traditional methods of research. The Chronicling America project, for example, recently digitized its 5 millionth newspaper page, and predicts that more than 20 million pages will be available within a few years. Numerous other programs are also digitizing newspapers at a rapid pace worldwide, making hundreds of millions of words from the historical record readily available in electronic archives that are reaching staggering proportions.

Such enormous collections offer tantalizing new possibilities for humanities research. Yet without tools and methods capable of sifting meaningful patterns from such massive datasets, the challenges of working with digitized newspapers are becoming equally overwhelming. Researchers, for example, too often find themselves confined to exploring such archives through basic text searches, which, when they produce several million hits, return too many results to analyze by hand in any meaningful way. And scholars typically have no way to evaluate basic metrics about a given online collection, such as how much data is available from a particular time and place, or the quality of the optical character recognition (OCR) that produced the digitized text. Harnessing the promise of digitized newspapers, in other words, requires building more transparent windows into the tremendous wealth of such archives.

Our purpose with Mapping Texts, then, has been to experiment with developing new methods for enabling scholars to sift, sort, and explore digitized historical newspapers for their research. To that end, we have attempted to combine the two most promising methods for analyzing large-scale datasets: data- and text-mining (for discovering meaningful patterns embedded in large bodies of text) and data visualization/mapping (for grouping, discovering, analyzing, and making sense of those patterns). Working with a collection of about 232,500 pages of digitized historical newspapers, we produced two interactive interfaces:

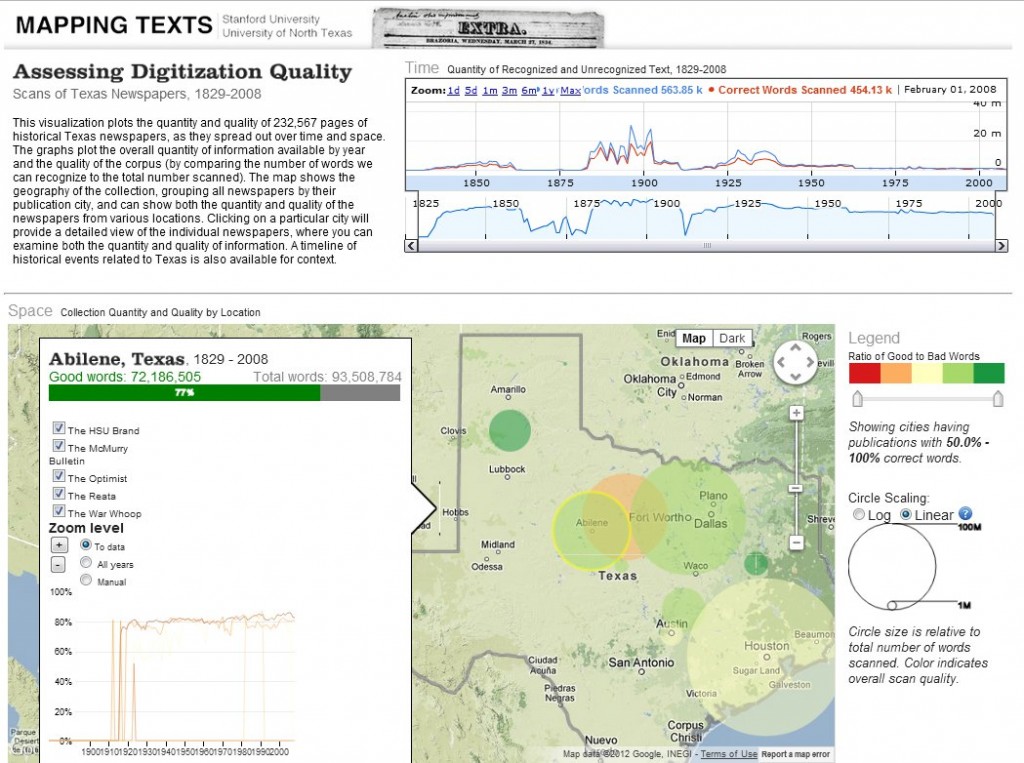

1. “Mapping Newspaper Quality” maps a quantitative survey of the newspapers, plotting both the volume and quality (OCR recognition rates) of information available in the digitized collection. Through graphs, timelines, and a regional map, users can explore these metrics for any particular time period, location, or newspaper. Clicking on individual newspaper titles also allows users to jump from “distant” to “close” readings of the texts.

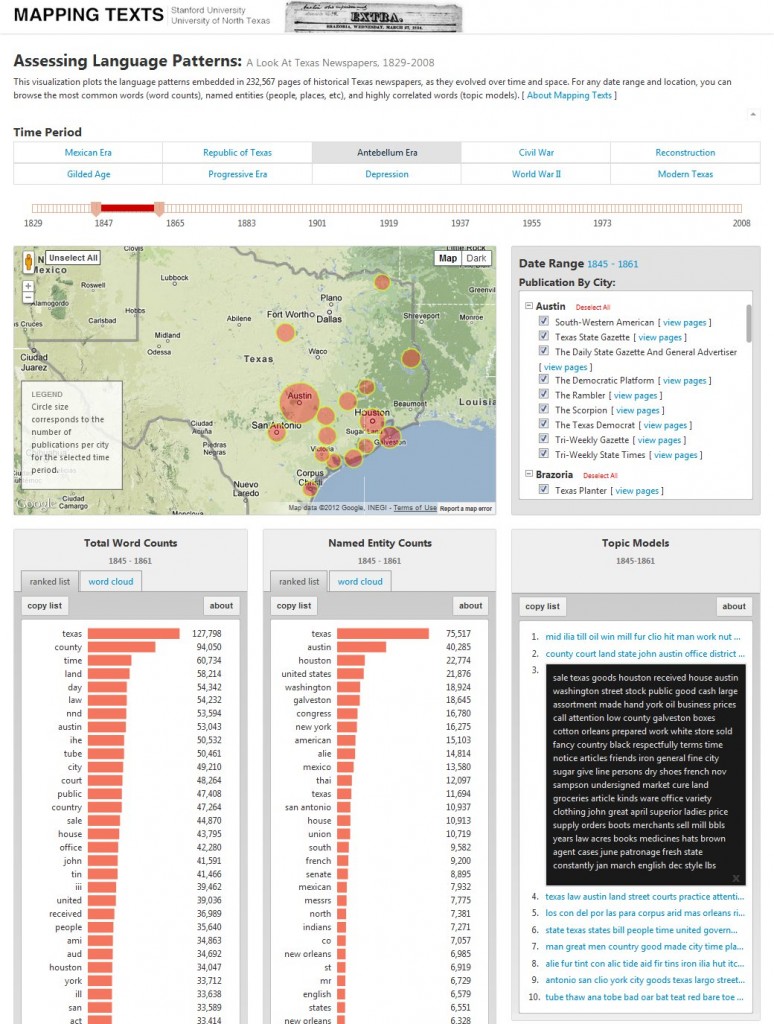

2. “Mapping Language Patterns” maps a qualitative survey of the newspapers, plotting major language patterns embedded in the collection. For any given time period, geography, or newspaper title, users can explore the most common words (word counts), named entities (people, places, organizations), and highly correlated words (topic models), which together provide a window into the major language patterns emanating from the newspapers. Clicking on individual newspaper titles also allows users to jump from “distant” to “close” readings of the texts.
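To make the two kinds of measures concrete, here is a minimal sketch of how a recognition rate and per-year word counts might be computed from OCR text. It is an illustration under stated assumptions, not the project's actual pipeline: the input format (year and text pairs), the definition of recognition rate as the share of tokens found in a reference vocabulary, the tiny stop-word list, and all function names are hypothetical.

```python
import re
from collections import Counter, defaultdict

# Assumption: a token is a run of ASCII letters; real OCR text needs more care.
TOKEN_RE = re.compile(r"[a-z]+")

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "as", "at"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return TOKEN_RE.findall(text.lower())


def recognition_rate(text, vocabulary):
    """Share of tokens found in a reference vocabulary.

    Assumption: this stands in for an OCR quality score; the source does
    not specify how its recognition rates were calculated.
    """
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    recognized = sum(1 for token in tokens if token in vocabulary)
    return recognized / len(tokens)


def top_words_by_year(pages, n=3):
    """Return the n most common non-stop-words for each year.

    `pages` is an iterable of (year, text) pairs.
    """
    counts = defaultdict(Counter)
    for year, text in pages:
        counts[year].update(t for t in tokenize(text) if t not in STOP_WORDS)
    return {year: counter.most_common(n) for year, counter in counts.items()}


# Made-up sample pages; the last one imitates typical OCR misreadings.
pages = [
    (1861, "The war and the cotton crop"),
    (1861, "Cotton prices rise as war spreads"),
    (1900, "Tbe stonn at Galveston"),
]
vocabulary = {"the", "storm", "at", "galveston", "war", "cotton"}

if __name__ == "__main__":
    for year, text in pages:
        print(year, round(recognition_rate(text, vocabulary), 2))
    print(top_words_by_year(pages, n=2))
```

Grouping the counts by year (or by place or title) is what lets a map or timeline display them; the same grouping key could be swapped for a city or newspaper name without changing the rest of the sketch.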

These two interfaces are built on top of the large archive of historical newspapers digitized by the University of North Texas (UNT) as part of the Chronicling America project and UNT’s Portal to Texas History. We selected this archive for several reasons: its nearly quarter million pages let us experiment with scale; the newspapers were digitized to the standard set by Chronicling America, providing a uniform sample; and the Texas orientation of all the newspapers gave us a consistent geography for our visualization experiments. It also represented the entire corpus available to us, or to any researcher, through UNT’s digital newspaper archive when we began the project during the fall of 2010.

The project was, at base, an experiment to see what we could discover about the breadth and depth of a single electronic newspaper archive, and what that might tell us about other similar archives. The project’s interfaces are meant to be used in tandem, with the hope that researchers will combine insights from the two in order to better sift through these collections and perhaps discover previously hidden connections in the newspapers.

This work depended heavily on collaborations between scholars at UNT and Stanford University — UNT’s Rada Mihalcea and Stanford’s Geoff McGhee, in particular. Please see the project website for a full listing of the team behind the project.